The Secret Of Info About How To Handle A Large Database

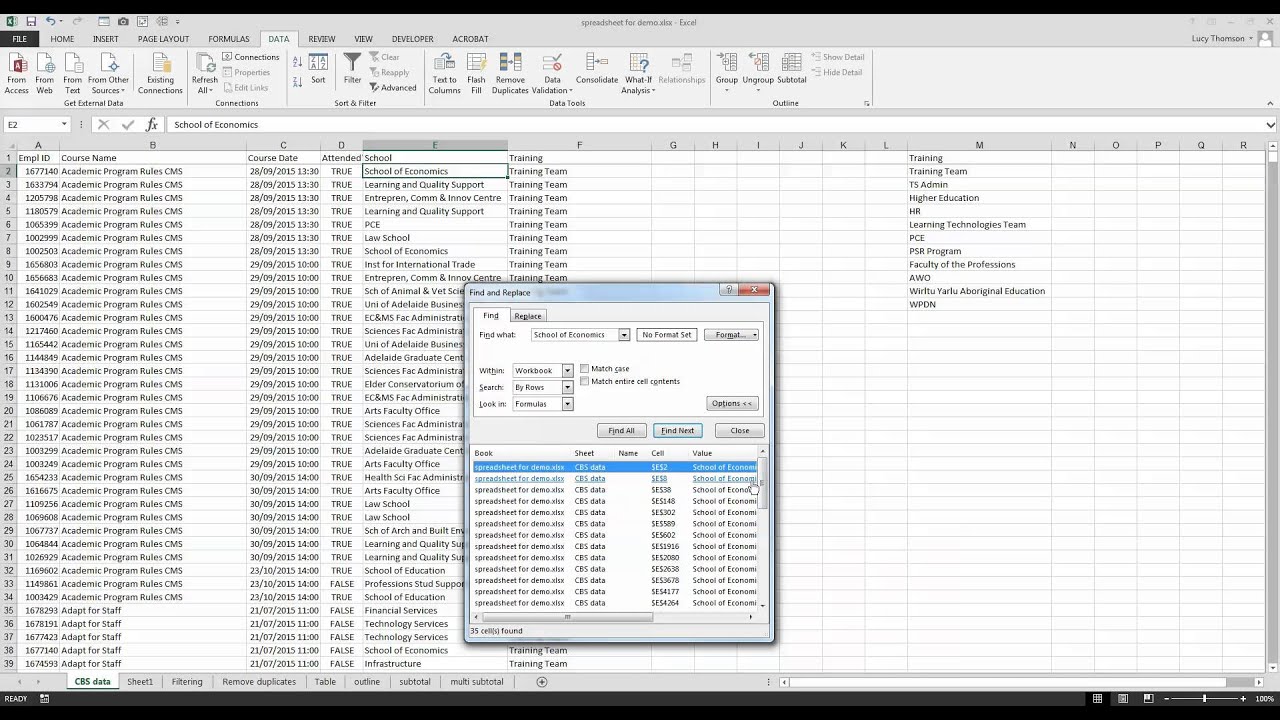

On the “import” tab, you will see a number of options for importing.

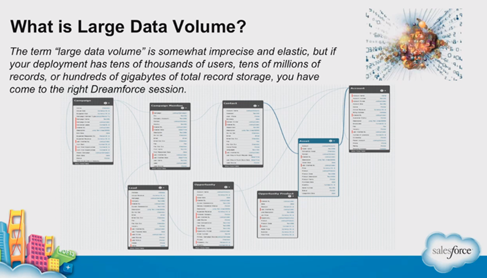

How to handle a large database. For large Excel files, I recommend using the App Integration Excel activities (those that require an Excel Application Scope). 7 Ways to Handle Large Data Files for Machine Learning. Alternatively, simply move to a server or hosting provider that can handle a large database.

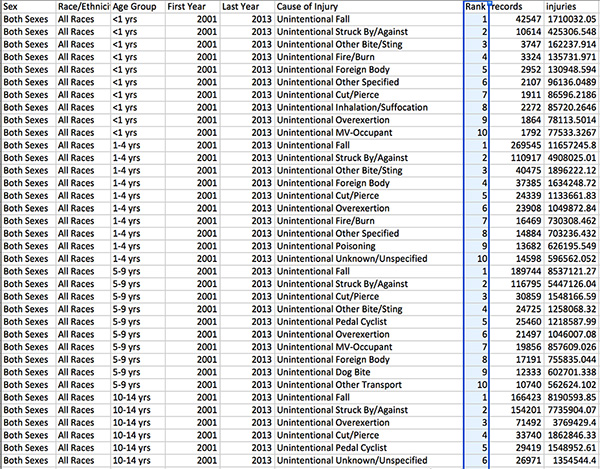

I have a table with around 600,000 records. Within a few hours of work, ... How to create consistency in large data management.

The size of the database matters because it affects both performance and management. In the preview dialog box, select Load To. Needless to say, performance has degraded.

Using a MATLAB script, you can import data in increments until all of it has been retrieved. Open a blank workbook in Excel. The historical (but perfectly valid) approach to handling large volumes of data is to implement partitioning.
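The incremental import idea mentioned above is not specific to MATLAB. As a rough sketch in Python, assuming the data lives in a SQLite database, rows can be pulled in fixed-size batches with the standard `sqlite3` cursor method `fetchmany`. The table name `records`, the helper `build_demo_db`, and the chunk size of 1000 are hypothetical and exist only to make the example runnable.

```python
import sqlite3


def fetch_in_chunks(conn, query, chunk_size=1000):
    """Yield lists of rows, at most chunk_size rows per list."""
    cursor = conn.cursor()
    cursor.execute(query)
    while True:
        rows = cursor.fetchmany(chunk_size)
        if not rows:
            # An empty list means every row has been retrieved.
            break
        yield rows


def build_demo_db(n_rows):
    """Create an in-memory demo table (hypothetical schema, for illustration only)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE records (id INTEGER PRIMARY KEY, value INTEGER)")
    conn.executemany(
        "INSERT INTO records (id, value) VALUES (?, ?)",
        ((i, i * 2) for i in range(n_rows)),
    )
    conn.commit()
    return conn


if __name__ == "__main__":
    conn = build_demo_db(2500)
    total = 0
    for chunk in fetch_in_chunks(conn, "SELECT id, value FROM records ORDER BY id"):
        # Process one batch at a time instead of holding the whole table in memory.
        total += len(chunk)
    print("rows retrieved:", total)
```

Because only one batch is held in memory at a time, the same loop should work whether the table has a few thousand rows or many millions, though the right chunk size depends on row width and available memory.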

Once the database has been selected, click on the “Import” tab located at the top of the phpMyAdmin interface. Keep the raw data in a file if you think you may need to go back to it. What are the best practices for handling this?

I have a database with over a million users, and each user has an enormous amount of data stored. 1. Create a structure for your client’s data. When collecting billions of rows, it is better (when possible) to consolidate, process, or summarize the data before storing it.
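The advice to summarize before storing can be illustrated with a small Python sketch. It assumes, purely for illustration, that the raw data is a stream of `(user_id, day, amount)` events and that one row per user per day is enough for later queries. The names `summarize`, `store_summary`, and the `daily_summary` table are hypothetical.

```python
import sqlite3
from collections import defaultdict


def summarize(events):
    """Collapse (user_id, day, amount) events into one row per (user_id, day)."""
    totals = defaultdict(lambda: [0, 0.0])  # key -> [event_count, total_amount]
    for user_id, day, amount in events:
        entry = totals[(user_id, day)]
        entry[0] += 1
        entry[1] += amount
    return [(u, d, c, t) for (u, d), (c, t) in sorted(totals.items())]


def store_summary(conn, summary_rows):
    """Store only the summarized rows (hypothetical schema)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS daily_summary ("
        "user_id INTEGER, day TEXT, event_count INTEGER, total_amount REAL, "
        "PRIMARY KEY (user_id, day))"
    )
    conn.executemany("INSERT INTO daily_summary VALUES (?, ?, ?, ?)", summary_rows)
    conn.commit()


if __name__ == "__main__":
    raw_events = [
        (1, "2024-01-01", 2.0),
        (1, "2024-01-01", 3.0),
        (2, "2024-01-01", 1.5),
    ]
    conn = sqlite3.connect(":memory:")
    store_summary(conn, summarize(raw_events))
    for row in conn.execute("SELECT * FROM daily_summary ORDER BY user_id"):
        print(row)
```

The trade-off is that detail lost during summarization cannot be recovered from the database, which is one reason to keep the raw data in a file if it might be needed again.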